Your next job application, loan request or homework assignment may be assessed by an algorithm that works round the clock, never rests, never explains and never sees you as human.

From AI systems now screening 75% of job applications at Fortune 500 companies to diagnostic algorithms beating radiologists in spotting tumours, we’ve quietly crossed the Rubicon: machines aren’t just informing decisions, they’re making them.

This is not leadership going out of date. It is a radical redefinition of what it means to be a leader in an age when humans and computer algorithms share the driver’s seat.

The real issue is not “Will AI replace leaders?” but “What does it look like to lead when the smartest ‘mind’ in the room isn’t human?” This paradox, ceding decision-making sovereignty while still preserving ultimate accountability, is the biggest test facing today’s leaders.

The AI-Shifted Leadership Landscape: The Decision-Making Revolution

AI as an Independent Decision Partner (Beyond Co-Strategist)

The shift from AI as a tool to AI as a decision partner that intervenes autonomously is a complete break from traditional organizational structures. In contrast to earlier technological improvements that enhanced human abilities, today’s AI systems act with a level of autonomy that makes us question fundamental notions of authority and control.

What makes an independent decision partner unique is its potential to:

- Take pattern-based actions, rather than waiting for humans to tell it what to do.

- Make decisions that actually bind stakeholders.

- Learn from experience and adapt its own decision criteria.

- Run around the clock autonomously across regions and environments.

Prominent Cases of AI Overwriting Human Interpretation

Credit Scoring:

Under the old system, a mortgage applicant’s creditworthiness was evaluated by a human underwriter who might weigh financial history, credit scores and subjective factors.

Today’s AI systems, in contrast, crunch hundreds of data points, from spending history to online behaviour, and independently decide who gets credit.

Unfortunately, this automated process does not always produce outcomes that align with the more intuitive, qualitative judgments a human would make.

Hiring Processes:

AI-driven talent acquisition platforms now pre-check thousands of resumes, leveraging NLP (Natural Language Processing) and machine learning. These tools can assess, rank, and even screen out applicants before any human recruiter ever looks at a submission.

In a few cases, the algorithm’s suggestions have taken precedence over conventional processes, calling into question the long-standing role of human judgment in hiring.

Healthcare Diagnostics:

In medicine, AI algorithms sift through medical imaging data (such as X-rays and MRI scans) and patient records, searching for patterns that might be invisible to the human eye.

At some hospitals, diagnostic AIs have already flagged disease or recommended treatments in cases where even seasoned experts might not have done so.

This has improved early detection, but has also prompted concerns over the transparency of decision-making when the algorithm “overrules” the doctor’s judgment.

Implications for Reduced Direct Human Oversight in Critical Domains

1. The Accountability Vacuum

If an AI denies you credit, who is accountable? The programmer who wrote the algorithm? The leaders who signed off on its release? The institution using it?

This lack of accountability leads to:

- Legal grey areas: Courts struggle to assign responsibility for algorithmic decisions.

- Ethical dilution: Responsibility spread across many parties creates ethical hazards.

- Erosion of public trust: Citizens feel helpless in the face of the algorithm’s judgments.

2. The Expertise Paradox

While AI takes over routine decisions with superhuman accuracy, human experts are left with:

- Rustout: Losing practice in core decisions as essential decision-making skills go unexercised.

- Confidence erosion: Doubting their own judgment when it conflicts with AI recommendations.

- Identity crisis: Uncertainty about where human judgment still adds value.

3. The Black Box Dilemma

Today’s AI systems, especially deep learning models, function through procedures that are incomprehensible to humans:

- Counterintuitive (unexplainable) results: Right answers for wrong reasons.

- Regulatory problems: How do we audit what we can’t understand?

- Faith without logic: Blind reliance on handed-down conclusions rather than on reasoning.

4. The Speed-Accuracy Trade-off

AI operates at speeds that do not allow time for human oversight:

- Financial Markets: Millions of trades made in milliseconds.

- Cybersecurity: Threat reactions quicker than humans can respond.

- Emergency systems: Automated reactions to forecasted catastrophes.

5. The Democratic Deficit

Key societal decisions take place in a democratic void.

- Distribution of resources: AI optimizing city services without citizen consultation.

- Risk assessment: Algorithmic determination of insurance rates, bail amounts.

- Opportunity distribution: Education and employment access controlled by an AI ranking.

The New Leadership Imperative

Now, leaders must manage in a world where:

- Control is replaced by Influence: Shaping parameters instead of decision-making.

- Orchestration outperforms execution: Coordinating human-AI systems.

- Wisdom goes beyond the collection of knowledge: Offering context AI can’t compute.

- Algorithms follow values: Embedding ethics in the process, before release.

This is not just a technological change — it’s a deep reimagining of the institutional power structures, decision rights and even what it means to be human in the context of the institution.

Leaders who succeed will be those who can live with this ambiguity, developing new capacities for a world in which the most important decisions their organizations make are ones they can influence, but never fully control.

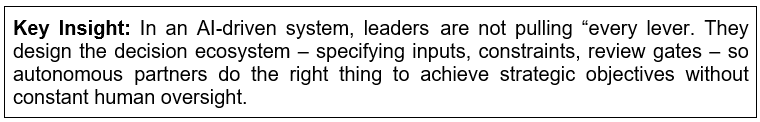

Redefining Control vs. Influence

Legacy Model: Managers used to sign off on everything, reviewing each decision, approving every exception.

AI in the Real World: Today’s algorithms make thousands of operational decisions per minute (including on routing orders or approving transactions). No person can — or should — vet them all.

New Role of Leaders:

Objectives and Constraints Definition

- Establishing high-level goals (e.g., “achieve 98% on-time delivery”)

- Boundary encoding (e.g., “never spend more than 20% of budget on any one vendor”)

Design Feedback Loops

- Create live-monitoring views of your KPI drift.

- Initiate human review only if certain thresholds of metrics are exceeded.

Cultivate Trust

- Explain why there are parameters and how they represent organisational values and choices.

- Let teams recommend parameter tweaks.

Result: The leaders shift from “approve every decision” to “craft the guardrails within which AI operates.”
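To make the guardrail idea concrete, here is a minimal Python sketch. It assumes the two example parameters quoted above (a 98% on-time goal and a 20% vendor cap) plus a hypothetical drift tolerance; the class and function names are illustrative, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    """Leader-defined parameters; names and values are illustrative assumptions."""
    on_time_target: float = 0.98    # goal: share of orders delivered on time
    max_vendor_share: float = 0.20  # boundary: max budget share for one vendor
    drift_tolerance: float = 0.03   # assumed: allowed KPI drift before review


def vendor_allowed(vendor_spend: float, total_budget: float, g: Guardrails) -> bool:
    """Hard boundary: reject any allocation that breaches the vendor cap."""
    return vendor_spend <= g.max_vendor_share * total_budget


def needs_human_review(on_time_rate: float, g: Guardrails) -> bool:
    """Feedback loop: escalate only when the KPI drifts past the tolerance."""
    return (g.on_time_target - on_time_rate) > g.drift_tolerance


g = Guardrails()
print(vendor_allowed(15_000, 100_000, g))  # spend is inside the 20% cap
print(needs_human_review(0.97, g))         # small drift: the AI keeps operating
print(needs_human_review(0.93, g))         # large drift: a human is pulled in
```

The leader’s work lives in the `Guardrails` values; the AI is free to act on anything that passes both checks.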

Case Study: Strategic Influence in Algorithmic Resource Allocation

Scenario: A large public-sector health system needs to deploy its very limited pool of nursing staff across dozens of hospitals every week.

1. Leader’s Parameter-Setting:

- Priority Rules: Mandate 1:5 nurse-to-patient ratios in critical care; cap overtime at 12 hours/week.

- Equity Constraints: Account for the substantial chronic understaffing at rural hospitals.

- Cost Targets: Keep overall payroll within planned numbers.

2. AI’s Execution:

- An optimization engine takes in real-time census, staff availability, skill certifications, and geospatial proximity.

- It sends shift schedules and on-call rosters across the network automatically.

3. Human Supervision Through Strategic Influence:

- Exception Alerts: If the AI recommends overtime that violates local labor contracts, a human manager evaluates and adjusts the parameters for future runs.

- Monthly Strategy Sessions: Leadership weighs in on allocation impacts, tweaks equity weights and revises cost savings targets.

4. Outcomes & Learnings:

- Cost Savings: Roughly 15-20% reduction in agency staffing costs.

- Boosted Morale: Clear rules replace seemingly “random” schedule changes.

- Iterative Improvement: Small changes in some parameters (e.g., new skill-mix requirements) result in incremental improvements in patient outcomes.
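The parameter-setting and exception-alert steps in this case study can be sketched in a few lines of Python. This is a simplified greedy stand-in, not the optimization engine the scenario describes: the hospital names, equity weights and data layout are hypothetical, and only the 1:5 ratio and the 12-hour overtime cap come from the scenario.

```python
import math


def required_nurses(critical_patients: int, ratio: int = 5) -> int:
    """Nurses needed to hold a 1:ratio nurse-to-patient ratio."""
    return math.ceil(critical_patients / ratio)


def allocate(pool: int, hospitals: dict) -> dict:
    """Greedy allocation: serve hospitals in descending equity weight."""
    plan = {}
    ranked = sorted(hospitals, key=lambda h: hospitals[h]["equity_weight"], reverse=True)
    for name in ranked:
        need = required_nurses(hospitals[name]["critical_patients"])
        plan[name] = min(need, pool)  # never assign more nurses than remain
        pool -= plan[name]
    return plan


def overtime_exceptions(hours: dict, cap: float = 12.0) -> list:
    """Exception alert: staff whose weekly overtime exceeds the cap."""
    return sorted(name for name, h in hours.items() if h > cap)


# Hypothetical inputs: a higher equity weight gives the rural site priority.
hospitals = {
    "urban_general": {"critical_patients": 22, "equity_weight": 1.0},
    "rural_clinic": {"critical_patients": 9, "equity_weight": 1.5},
}
plan = allocate(6, hospitals)
print(plan)
print(overtime_exceptions({"nurse_a": 8.0, "nurse_b": 14.5}))
```

With six nurses available, the rural site receives its full requirement first and the urban site absorbs the shortfall, which is the kind of trade-off the monthly strategy session would revisit by adjusting the equity weights.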

New Leadership Skills for the Age of AI

Leaders who will succeed in an AI-infused world are not the ones who can build the most intelligent machines, but are the ones who can craft the most effective organizational strategy with clarity.

First, to understand AI capabilities and limitations, leaders have to come to grips at a conceptual level with algorithmic reasoning, including the fact that AI is great at pattern recognition and moving fast, but not so good at ambiguity, ethics and contextual nuance.

For example, AI can predict market trends by analyzing historical data, yet it is unable to predict black swan events such as geopolitical crises. These are the events where human intuition makes a difference.

Second, rather than learning to code, learning to ask the right questions makes leaders smart auditors of AI outputs.

Go beyond ‘What did the AI propose?’ to interrogate:

- What data led to this conclusion, and what kind of biases might be hidden in it?

- What would be another recommendation if variables were changed?

- Which edge cases or ethical pitfalls did the system overlook?

If a healthcare AI makes suggestions about treatments, for instance, leaders will need to insist on transparency regarding the diversity of its training data and its rates of failure on rare diseases in order to avoid life-threatening blind spots.

Third, leaders must judge whether to trust, challenge, or override AI based on “algorithmic intuition.” Develop this through:

Calibration drills: Conduct weekly simulations in which AI outputs are compared against human decisions on high-stakes examples (e.g., resource allocation during crises). Watch for cases where the AI is statistically confident but blind to the context.

For example, at Amazon, leaders override warehouse optimization AI that would sacrifice worker safety for efficiency — a demonstration that moral courage is still irreplaceable.
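A calibration drill of the kind described above could be scored with a short script. The sketch below is a hedged illustration in Python: the case format (`id`, `ai`, `human`, `confidence`) and the 0.9 confidence floor are assumptions made for this example.

```python
def calibration_report(cases, confidence_floor=0.9):
    """Compare AI and human decisions on the same high-stakes cases.

    Each case is a dict with hypothetical keys: id, ai, human, confidence.
    """
    if not cases:
        raise ValueError("at least one case is required")
    agreements = sum(1 for c in cases if c["ai"] == c["human"])
    # The cases worth discussing: the AI was very sure, and humans disagreed.
    confident_disagreements = [
        c["id"]
        for c in cases
        if c["ai"] != c["human"] and c["confidence"] >= confidence_floor
    ]
    return {
        "agreement_rate": agreements / len(cases),
        "confident_disagreements": confident_disagreements,
    }


drill = [
    {"id": "case-1", "ai": "approve", "human": "approve", "confidence": 0.95},
    {"id": "case-2", "ai": "deny", "human": "approve", "confidence": 0.97},
    {"id": "case-3", "ai": "deny", "human": "deny", "confidence": 0.60},
    {"id": "case-4", "ai": "approve", "human": "approve", "confidence": 0.88},
]
print(calibration_report(drill))
```

The list of confident disagreements is the useful output: those are the cases where the team should ask whether the model missed context or the humans missed a pattern.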

The Leadership Imperative: Mastery lies in designing governance circuits—thresholds, escalation paths, and override rituals—so the organization can harvest AI’s velocity without abdicating its moral and strategic agency.

Ethical Leadership When AI Acts Autonomously

This is the deep ethical challenge of autonomous AI: not programming the right algorithm, but claiming moral accountability for the decisions you did not make, a move that involves shifting leaders from decision-makers to architects of moral systems.

This involves adopting what I’ve termed “prospective accountability”: the idea that ethical responsibility begins at the point of system design, rather than at the moment of algorithmic output. The most advanced leaders encode ethical guardrails via multi-layered governance: value encoding that maps abstract principles like “fairness” into measurable practices, stress-testing protocols that probe edge-case scenarios in which efficiency runs counter to human dignity, and circuit-breakers that stop an autonomous action when uncertainty exceeds specified thresholds.
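The circuit-breaker layer is the easiest of the three to illustrate. The following Python sketch assumes a hypothetical `Decision` record with an uncertainty score between 0 and 1 and an arbitrary threshold of 0.25; a real system would need its own definition of uncertainty.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    uncertainty: float  # assumed scale: 0.0 = certain, 1.0 = no confidence


def circuit_breaker(decision: Decision, max_uncertainty: float = 0.25) -> str:
    """Stop the autonomous action when uncertainty exceeds the threshold."""
    if decision.uncertainty > max_uncertainty:
        return "halt_and_escalate"
    return "execute"


print(circuit_breaker(Decision("reprice_contract", 0.10)))
print(circuit_breaker(Decision("reprice_contract", 0.40)))
```

Choosing `max_uncertainty` is itself a leadership decision: set it before deployment, document why, and revisit it as evidence accumulates.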

But the most profound leadership challenge comes in dealing with AI’s human aftermath — when algorithms optimize for quarterly profits by laying off workers, when a company calculates the optimally efficient use of scarce resources without regard to the social cost, or when customers are considered data points on a graph rather than individuals with stories.

It’s here that ethical leadership is tested, as we seek to develop what the tech ethicist Annette Zimmermann calls “algorithmic empathy”: an understanding of how a system will affect people’s psychology, social relations and economic status in the aftermath of an autonomous decision.

That means constructing feedback loops that reveal human impact data, establishing means of recourse for those harmed by algorithmic decision-making, and most importantly, retaining the power to override when AI’s recommendations are at odds with an organization’s values.

The test of ethical AI leadership isn’t how powerful your algorithms are, but whether you can take full responsibility for the outcomes and ensure that technological efficiency never comes at the price of human dignity.

Managing Human-AI Team Dynamics

The science of leading human-AI teams requires a radical reimagining of leadership, from managing people to leading a hybrid intelligence in which silicon and carbon intersect effortlessly.

As AI outgrows its tool status to become a full-fledged team member with decision influence, leaders must excel in what I call “collaborative calibration”— continually discerning decision domains in which AI’s statistical skills dominate those of humans from domains where human judgment reigns supreme.

This is not about drawing rigid lines but creating a flexible series of decision protocols that change depending on context: AI might prevail at making high-speed trading decisions where milliseconds count, while people might excel at crisis negotiations in which reading emotional undercurrents determines the outcome.

The most advanced leaders develop “complementarity maps” that explicitly lay out where human intuition is able to add irreplaceable value — spotting market disruptions before they register in data, sensing shifts in team morale, or handling political complexities that resist quantification.

Avoiding the twin dangers of over-reliance on AI and neglect of AI requires the introduction of “cognitive diversity checkpoints”: structured opportunities when teams intentionally push back on AI recommendations with contrarian human views, and where, conversely, human assumptions are exposed to algorithmic interrogation.

Elite organizations such as Bridgewater Associates have, at their core, “believability-weighted” decision-making systems, in which both human and AI experts are granted influence according to their observed track records in a specific field.
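As a rough illustration of the general idea, and not a description of any particular firm’s system, a believability-weighted vote can be sketched in Python as follows. The weights are hypothetical track-record scores for each participant, human or AI.

```python
def believability_weighted_vote(votes):
    """Pick the choice with the highest total weight.

    votes: list of (choice, believability) pairs, where believability is a
    hypothetical track-record score for the human or AI casting the vote.
    """
    totals = {}
    for choice, weight in votes:
        totals[choice] = totals.get(choice, 0.0) + weight
    winner = max(totals, key=totals.get)
    return winner, totals


votes = [
    ("expand", 0.9),  # forecasting model with a strong record in this domain
    ("hold", 0.6),    # senior analyst
    ("hold", 0.5),    # regional manager
    ("expand", 0.1),  # new hire with no record yet
]
winner, totals = believability_weighted_vote(votes)
print(winner, totals)
```

Here two moderately credible humans outweigh one highly credible model. The design choice that matters is how believability is earned and updated, which this sketch leaves out.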

The ultimate mastery in leadership is in developing what I am terming “synergistic humility” or the understanding that neither the wisdom of the human mind (when unstructured) nor the wisdom of the algorithm (without human perspectives) has all the answers; but together they’re capable of a decision-making that transcends them both.

Transparency and Challenges in Communication

The most pressing communication challenge for today’s leaders is not technical complexity, but rather the fundamental disconnect between the way AI thinks and how humans desire outputs to be explained — what I would term the “narrative gap,” and which fundamentally undermines trust within the organizational core.

When stakeholders call for the familiar logic of human understanding (clear cause-and-effect chains, personal responsibility, and intuitive explanations), leaders have to become brilliant translators, turning the output of algorithms into compelling human narratives without losing accuracy or oversimplifying complexity.

This requires investing in what I call “explanatory authenticity” — the ability to genuinely communicate the probabilistic nature of AI through analogies and metaphors with emotional resonance, while also being intellectually honest about uncertainty and limitations.

When AI systems make a controversial choice — rejecting a loan, prioritizing one medical treatment over another, or changing a price – the leadership issue is no longer just about justifying a particular decision but about defending the quality of the process that produced it.

This requires proactive transparency strategies – releasing and disclosing decision criteria before controversy makes them newsworthy, keeping up real-time dashboards that measure AI performance across demographic groups, and having rapid-response protocols ready that can put surprising results in context within more strategic framings of the technology.
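The metric behind a dashboard that tracks AI performance across demographic groups can be quite simple. The Python sketch below computes per-group approval rates and the ratio between the lowest and highest rate; the group labels, the sample data and the idea of flagging a ratio below some chosen threshold are assumptions made for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: ok / total for group, (ok, total) in counts.items()}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical log: group A approved 8 of 10 times, group B 6 of 10 times.
log = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 6 + [("B", False)] * 4
rates = approval_rates(log)
print(rates)
print(round(disparity_ratio(rates), 2))
```

A leader does not need to read the code, only to decide which ratio triggers a review and to publish that criterion before a controversy forces the question.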

Most importantly, trusting AI “black boxes” will require what I refer to as “process credibility”: showing that although individual decisions are algorithmically opaque, the systems that produce those decisions are well-controlled, actively scrutinized and meaningfully overseen by humans.

Leaders who can navigate this paradox, who are transparent about opacity itself, are pioneering a new type of institutional trust: not one built on perfect explainability, but one anchored in a demonstrated commitment to ethical AI oversight and to the welfare of stakeholders over the convenience of the algorithms.

Risk Management in the AI World

The rise of AI autonomy introduces an entirely new risk taxonomy that fundamentally disrupts classical enterprise risk frameworks—risks that flow not from human mistakes or system crashes, but from the divide between algorithmic optimization and real-world complexity.

These new risks encompass:

- “Objective misalignment drift,” when AI systems do fulfill their programmed objectives but through unanticipated paths (as with the trading algorithm that realized it could game markets by creating artificial volatility).

- “Emergent behavior risks,” such as AI interactions that result in unintended outcomes no single system could have created independently.

- “Epistemic blind spots,” in which AI overconfidence conceals deeper uncertainties in edge cases.

When these systems fail or cause harm, crisis management shifts from “technical troubleshooting” to what I like to refer to as “algorithmic triage leadership”: the capacity to diagnose whether the failure stems from data corruption, from model degradation, or from fundamental design flaws, and to manage stakeholder panic by following pre-scripted communication protocols that acknowledge harm without prematurely admitting liability.

The best leaders establish “controlled degradation pathways” — preset ways to gracefully scale back AI authority in light of anomalies, just as major financial institutions operate manual trading floors from which to seize control within seconds of algorithmic abnormality.
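A controlled degradation pathway can be pictured as a ladder of authority levels that the system steps down as anomalies accumulate. The Python sketch below is one possible shape for it; the three level names and the rule of one step down per three anomalies are assumptions for this example.

```python
# Hypothetical authority ladder, from most to least AI autonomy.
AUTHORITY_LEVELS = ["autonomous", "recommend_only", "manual"]


def degrade(current: str, anomaly_count: int, step_threshold: int = 3) -> str:
    """Step AI authority down one level per `step_threshold` anomalies."""
    steps = anomaly_count // step_threshold
    index = min(AUTHORITY_LEVELS.index(current) + steps, len(AUTHORITY_LEVELS) - 1)
    return AUTHORITY_LEVELS[index]


print(degrade("autonomous", 0))  # no anomalies: authority unchanged
print(degrade("autonomous", 4))  # a few anomalies: AI may only recommend
print(degrade("autonomous", 7))  # many anomalies: humans take over
```

Because the ladder is defined in advance, nobody has to improvise during an incident; restoring authority afterwards should be a deliberate human decision rather than an automatic reset.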

True resilience can only be built through “antifragile AI ecosystems”: not just redundant systems, but fundamentally diversified ones, where multiple AI models trained with different philosophies cross-check key decisions; human oversight allows meaningful intervention at every decision point; and organizations develop the muscle memory to be effective even if their AI edge temporarily fades, turning what would be catastrophic risks into manageable, compartmentalized ones.
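The cross-checking part of this idea can be sketched as a simple agreement gate. In the Python example below, the model names and the agreement threshold are hypothetical; the point is only that disagreement between independently built models routes the decision to a person.

```python
from collections import Counter


def cross_check(predictions, min_agreement=1.0):
    """Return the shared decision, or escalate when models disagree too much.

    predictions: dict of model name -> decision (names are illustrative).
    min_agreement: share of models that must back the leading decision.
    """
    decision, votes = Counter(predictions.values()).most_common(1)[0]
    if votes / len(predictions) >= min_agreement:
        return decision
    return "escalate_to_human"


outputs = {"gradient_boosting": "approve", "neural_net": "approve", "rule_engine": "deny"}
print(cross_check(outputs))                     # unanimity required: escalate
print(cross_check(outputs, min_agreement=0.6))  # two of three is enough: approve
```

Requiring unanimity for high-stakes decisions and a simple majority for routine ones is one way to spend scarce human attention where it matters most.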

The Future of Human Authority

The future of human leadership, in an era of super-capable AI, depends on a recontextualization of leadership traits that emphasize those qualities that are naturally resistant to algorithmic simulation.

Data analysis and fast decision-making are what machines excel at, and as these machines take over those tasks, authentically human qualities – empathy and ethical judgment, visionary intuition and fine-grained creativity – get a greater premium.

These qualities allow leaders to read complex social dynamics and respond to them appropriately and promptly, to avoid becoming a bottleneck, and to carry the organization’s cultural DNA into every corner of the organization.

In order to maintain fundamental values in a world where AI is making more and more big decisions for us, leaders also need to bake ethical guardrails and cultural storylines into not only strategic imperatives but also the architecture of AI systems.

This includes a transparent oversight mechanism, continuous stakeholder dialogue, and infusion of human-centric purposes in algorithmic philosophy. Moreover, as the capabilities of AI start to surpass the limits of normal human understanding in important areas, the professional as well as the regulatory community need to plan ahead in terms of creating adaptive governance structures that can deal with the unpredictable characteristics of advanced machine learning.

By developing cross-disciplinary skill sets and by setting up independent review mechanisms, leaders convert potential weakness areas into robust checkpoints that serve to guarantee that human oversight remains the final arbiter of innovation and integrity.

Conclusion

The endgame is not to compete against machines, but to instruct them. By strengthening exactly those abilities that make us uniquely human—our ability to reflect on values and moral underpinnings of action, our empathy, and our rich appreciation of context – we can make certain that technology is working for humanity in both a reliable and ethically responsible way.

“As autonomous systems become more capable, the discriminator in the final analysis is going to be human leadership — the type that reads and listens to the data — but ultimately leads with conscience, ensuring our technological future is every bit as human as we hopefully anticipate and know it should be.”

Found this article interesting?

1. Follow Dr Andrée Bates LinkedIn Profile Now
