Case Study:

Using Large Language Models (LLMs) like ChatGPT to Speed Up Medical Literature Monitoring and Insights

How Large Language Models are helping medical affairs teams increase productivity and reduce medical literature monitoring time

The fastest-growing bodies of content, in both volume and complexity, are in life sciences and healthcare. Analyzing this content (including clinical studies, publications, guidelines and more) for medical insights will drive better decisions.

The Client

The client was spending significant time monitoring and evaluating new medical content to stay up to date. This was a very resource-intensive activity, and they wanted a way to automate part of it.

The Solution

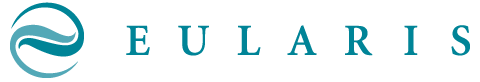

The solution used data-driven Natural Language Intelligence (including LLMs) to combine scale and flexibility with contextual understanding and precision, providing content intelligence for deeper understanding and efficiency.

The Outcome

- Significant increase in productivity (client estimates following implementation average 1000%)

- Significant decrease in literature monitoring time (client estimates following implementation average 82-92%)

- Vastly improved tracking to support portfolio products

To achieve these kinds of results, contact Eularis today.
