Quietly, every day, in boardrooms from Silicon Valley to Singapore, a new wave of artificial intelligence systems is making thousands of decisions that shape our lives in fundamental ways: what we eat, who gets a mortgage or a loan, who is hired, how long a prison sentence should be, whether a medical scan signifies cancer, and which students are admitted to prestigious universities.

But inside this technical breakthrough lies a worrying reality: these systems, trained on data sets that mirror our world’s history of discrimination, do not just reflect those biases; they magnify them at machine speed.

When an employment algorithm systematically down-ranks candidates with common female names, when facial recognition struggles to recognize darker skin tones, or when predictive policing tools reinforce discriminatory enforcement patterns, we see first-hand how the promise of unbiased, data-driven decision-making is all too rarely kept, and how it can turn into a sophisticated tool for perpetuating the status quo.

That is why diversity, equity and inclusion in AI development have gone from a moral nicety to an engineering necessity: biased AI isn’t just an ethical issue, it is technically flawed, producing systems that fail badly for whole populations and can end up sabotaging the very goals they were built to serve.

At this crossroads, the way ahead requires more than good intentions. It demands a fundamental reimagining of how we build, roll out and govern AI systems, recasting equity not as an afterthought but as the foundational principle that ensures the world’s most powerful technologies serve everyone effectively, not just the elite.

Understanding Bias in AI Systems

The Nature and Sources of AI Bias

Bias in AI isn’t an isolated flaw; it is a systemic inheritance of human society’s flaws. Whereas human discrimination comes from intent or ignorance, algorithmic bias works in four pernicious, often invisible ways:

1. Historical Bias:

The past haunts the present. When credit algorithms are trained on decades of loan data, they incorporate redlining policies, which excluded Black neighbourhoods from the banking system. The algorithm finds “risk patterns” where society engineered exclusion. This isn’t simply flawed data; it is the automation of injustice, with the exclusions of the past carried forward into present-day decisions.

2. Representation Bias:

The tyranny of the majority. A face ID system trained mostly on light-skinned male faces performs appallingly for dark-skinned women (error rates of up to 35% in MIT tests). This is not a “glitch”; it is demographic erasure. AI becomes a means of exclusion when models treat minorities as anomalies rather than as important stakeholders in the training data.

3. Measurement Bias:

The illusion of objectivity. When an AI determines whether you’re a good candidate for a job by monitoring eye contact in a video interview (a capability some hiring tools boast), it encodes a Western bias into its measure of “professionalism.” Deaf candidates or unconventional thinkers can fail by default. This turns cultural preferences into algorithmic discrimination.

4. Algorithmic Bias:

The math of inequity. An optimization objective that targets “efficiency” alone can quietly trade away fairness. So-called predictive policing algorithms that mark neighbourhoods as “high risk” based on past arrest data produce feedback loops: over-policing → more arrests → worse bias. The algorithm didn’t invent bias; it weaponized statistical correlation.
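The feedback loop in the predictive policing example can be illustrated with a toy simulation. This is only a sketch under strong assumptions: the district names, the patrol budget, the `hot_share` allocation rule and the identical true crime rate are all hypothetical, chosen to show how a small initial gap in recorded arrests can widen even when underlying behaviour is the same.

```python
def simulate_feedback(initial_arrests, true_rate=0.1, patrols=100,
                      rounds=5, hot_share=0.7):
    """Toy model: the district with the most recorded arrests gets most patrols.

    Every district has the same true_rate, so any growth in the gap comes
    from where officers are sent, not from differences in behaviour.
    Assumes at least two districts.
    """
    arrests = dict(initial_arrests)
    history = [dict(arrests)]
    for _ in range(rounds):
        # The "high risk" label goes to whoever has the most recorded arrests.
        hot = max(arrests, key=arrests.get)
        for district in arrests:
            if district == hot:
                share = hot_share
            else:
                share = (1 - hot_share) / (len(arrests) - 1)
            # Recorded arrests scale with patrol presence, not with true crime.
            arrests[district] += patrols * share * true_rate
        history.append(dict(arrests))
    return history


# Hypothetical starting point: a one-arrest difference in the historical record.
history = simulate_feedback({"north": 11, "south": 10})
gaps = [h["north"] - h["south"] for h in history]
print(gaps)  # the recorded gap widens every round
```

The allocation rule is deliberately crude. The point is only that recorded data reflects where enforcement looked, so retraining on it can reinforce the original disparity.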

These biases do not just echo inequality of opportunity — they compound and then multiply it:

- A biased medical diagnostic tool not only misdiagnoses — it perpetuates generations of distrust in health care systems.

- A biased hiring algorithm doesn’t just reject some applicants; it alters the shape of industry talent pipelines for generations to come.

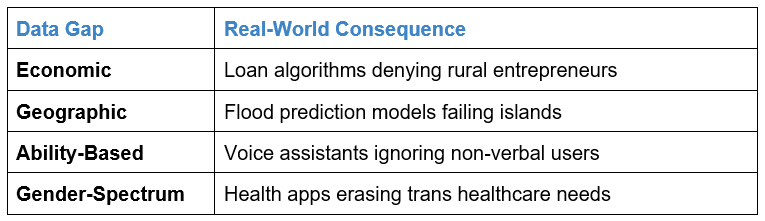

Data Challenges: The Poisoned Wellspring

Data is not neutral — it’s a cultural artifact that reflects our collective blind spots. The foundation is corrupted by three cracks:

Sampling Bias: The Unseen Exclusion

- When AI in health is trained on data from privileged urban hospitals, it fails underprivileged rural patients who lack access to care.

The deeper flaw: Many samples do not capture edge cases where societal vulnerability is greatest (e.g., indigenous populations with different genetic markers).

Labelling Bias: Annotating With Prejudice

- Content moderation tools flag AAVE (African American Vernacular English) as “hate speech” but miss white supremacist dog whistles.

The hidden cost: Low-wage labellers (often in the global south) make culturally detached decisions, embedding colonial perspectives into data sets.

The Representation Crisis: When Data Ignores Humanity

The Core Paradox: We want “representative data,” but we don’t acknowledge that marginalized communities often have very good reasons not to trust that data will be collected “respectfully” (e.g., Indigenous groups that refuse to take part in genetic research because of previous exploitation).

The Unspoken Truth:

Bias is not an “error” to be corrected — it is the product of tech’s epistemological arrogance. We have constructed systems that are predicated on the notion that:

- Every aspect of being human can be measured

- More data = more understanding

- Statistical truths reflect the facts of life

This is why a supposedly “unbiased” mortgage algorithm can still discriminate by race: it confuses data exhaust (the digital spoor of systemic inequality) with empirical reality.

The Path Forward:

It is a mistake to think that technical fixes alone will solve bias. We also need:

- Data Sovereignty Frameworks: Enabling communities to own their data footprint.

- Bias Stress Testing: Actively trying to break models at the edges (e.g., “How might this fail someone in a wheelchair in Manila?”).

- Algorithmic Reparations: Prioritizing solutions for those historically left behind first.

The next stage of AI ethics isn’t fairness — it’s justice for the oppressed. And that begins by acknowledging that bias isn’t in the code, it’s in the mirror that we refuse to hold up to ourselves.

Real-World Impacts of AI Bias

The impact of bias in AI systems is not just theoretical: it creates real obstacles and reinforces inequalities in many areas of life.

On the workplace front, AI-based recruiting tools have been found to be biased against women in technical roles, to screen out certain candidates with disabilities, and to prefer applicants from particular educational backgrounds or regions. These systems, which are meant to make hiring more efficient and objective, can in fact automate and scale existing biases in ways that are hard to detect and even harder to question.

The financial industry has not been immune to such problems. AI-powered loan algorithms can disadvantage minority borrowers even when race or ethnicity is specifically barred from consideration. These systems may discover proxy variables that are correlated with a protected characteristic, resulting in disparate impacts that are legally troubling and socially harmful.
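One rough way to check whether a feature acts as a proxy is to ask how much better the protected attribute can be guessed once the feature is known. The sketch below assumes categorical data and uses made-up postcode and group values; the function name `proxy_strength` is hypothetical, and a real audit would use larger samples and held-out data.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Return (baseline_accuracy, accuracy_with_feature).

    baseline: always guess the most common protected value.
    with feature: guess the most common protected value within each
    feature value. A large jump suggests the feature leaks the attribute.
    """
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n

    by_feature = defaultdict(Counter)
    for feature, protected in zip(feature_values, protected_values):
        by_feature[feature][protected] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_feature.values())
    return baseline, correct / n


# Hypothetical toy data: postcode is never "race", but it tracks group closely.
postcode = ["A", "A", "A", "A", "B", "B", "B", "B"]
group = ["x", "x", "x", "y", "y", "y", "y", "x"]

baseline, with_feature = proxy_strength(postcode, group)
print(baseline, with_feature)  # 0.5 0.75
```

Dropping the protected column does little if a feature like this remains, which is why a model can produce disparate impact without ever seeing race or ethnicity.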

Healthcare AI provides some particularly worrying examples – here, biased systems may quite literally be a matter of life and death. Poorly performing AI diagnostic tools for some populations could result in misdiagnosis, treatment delay, or inappropriate recommendations for care. Pulse oximeters — devices that measure blood oxygen levels — have proven to be less accurate for patients with darker skin, which could result in missed cases of severe illness.

In law and order, AI applications for risk assessment and predictive policing have been criticized for reflecting the racial prejudices found in the historical record of crime and law enforcement. These systems might recommend longer prison sentences for minority defendants or send more police to communities of colour, creating feedback loops that perpetuate disparities.

The Business Case for Inclusive AI

Apart from the moral and legal aspects, there are strong business cases for why companies ought to prioritize diversity and inclusion in their AI efforts. Biased AI systems pose substantial risks, ranging from legal liability and reputational harm to lost market potential.

From a legal standpoint, companies operating systems that produce discriminatory results can be sued, fined, and held liable for failing to comply with regulations. As governments across the globe establish AI governance models, businesses running biased systems could come under scrutiny and face sanctions.

On the reputational front, firms that use biased AI systems risk substantial brand damage and consumer pushback. In an age in which corporate social responsibility is paramount to consumers, employees and investors, AI bias can erode both trust and loyalty.

More fundamentally, biased AI systems are just less effective in serving diverse populations. An AI system that only works well for a sub-sample of potential users is leaving value on the table. Businesses that can produce more inclusive AI solutions can also better address larger markets, discover new opportunities, and produce more robust and generalizable solutions.

Diverse teams tend to do better, and that holds true for AI work. Heterogeneous teams of developers bring different perspectives and experiences, which helps expose possible biases, edge cases, and unintended consequences that a more homogeneous team might overlook.

Technical Solutions to Reduce AI Bias

To tackle the problem of AI bias, a multi-pronged technical solution needs to be considered, spanning the AI development lifecycle from data collection and pre-processing through model training to testing and roll-out.

Diversity and quality of the data are key for equitable AI. This involves proactive efforts to ensure that training data are representative of the populations that the AI system will serve. There is a growing trend of companies investing in the collection of diverse data, synthetic data generation as well as data augmentation to mitigate underrepresentation.
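A first check on representativeness is simply to compare each group's share of the training data with its share of the population the system will serve. The sketch below assumes you already have reference shares from some trusted source; the group labels and figures are invented for illustration.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return {group: sample_share - reference_share}.

    Negative values mean the group is under-represented in the sample
    relative to the reference population.
    """
    n = len(sample_groups)
    counts = Counter(sample_groups)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in reference_shares.items()
    }


# Hypothetical data set: 80% urban records, but the served population is 60% urban.
sample = ["urban"] * 8 + ["rural"] * 2
reference = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(sample, reference)
print(gaps)  # rural is about 20 percentage points under-represented
```

A gap like this is a prompt for targeted data collection or augmentation; it does not by itself say how the model will perform for the under-represented group.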

Preprocessing methods can be used to detect and reduce bias in training data. This involves techniques for identifying imbalanced representation, removing discriminatory features, and using statistical approaches to minimize the impact of historical biases present in the data.
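One well-known statistical approach of this kind is reweighing, which assigns each training example a weight so that group membership and outcome label look statistically independent in the weighted data. The article does not name a specific method, so treat the following as one illustrative option, with hypothetical groups and labels.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by expected_count / observed_count.

    expected_count is what the (group, label) cell would contain if group
    and label were independent; observed_count is what the data has.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = group_counts[g] * label_counts[y] / n
        weights.append(expected / joint_counts[(g, y)])
    return weights


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(w, y) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for w, y in rows if y == 1) / sum(w for w, _ in rows)


# Hypothetical history: group "a" was approved 3 times out of 4, group "b" once.
groups = ["a"] * 4 + ["b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(rate_a, rate_b)  # both groups now have the same weighted positive rate
```

The weights would then be passed to any learner that accepts per-sample weights. Reweighing only addresses the label imbalance between groups; it does not fix labels that were themselves recorded unfairly.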

Moreover, when developing models, fairness-aware machine learning techniques can help ensure that AI systems satisfy specified equity criteria. This encompasses algorithms for training models subject to fairness constraints, ways to measure and optimize for various definitions of fairness, and methods for balancing accuracy and fairness objectives.

Testing must go beyond traditional accuracy checking: a robust evaluation regime includes explicit fairness assessments. That means testing AI systems on various demographic groups, measuring disparities between them, and testing for different kinds of bias and discrimination.
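In practice this usually starts with disaggregated evaluation: computing the same error metrics separately for each group rather than once overall. The following is a minimal sketch for binary labels, with invented data; the function name and report keys are assumptions made for illustration.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return accuracy, false negative rate and false positive rate per group."""
    tallies = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        # "t"/"f" = prediction correct or not; "p"/"n" = predicted class.
        key = ("t" if truth == pred else "f") + ("p" if pred == 1 else "n")
        tallies[group][key] += 1

    report = {}
    for group, c in tallies.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        report[group] = {
            "accuracy": (c["tp"] + c["tn"]) / (positives + negatives),
            "false_negative_rate": c["fn"] / positives if positives else None,
            "false_positive_rate": c["fp"] / negatives if negatives else None,
        }
    return report


# Hypothetical results: 75% accuracy overall hides a large gap between groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

report = error_rates_by_group(y_true, y_pred, groups)
print(report["a"])
print(report["b"])
```

Here the aggregate accuracy looks acceptable, yet group "b" sees half of its true positives missed, which is exactly the kind of disparity an overall score conceals.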

Post-deployment monitoring is essential to keep the AI systems fair over time. This includes continued performance tracking over diverse populations, mechanisms to detect bias shift as such systems see new data, and mechanisms to act on discovered fairness issues.

Organization-Level Approaches to Inclusive AI

Technical solutions are necessary but not sufficient to address AI bias. Inclusive AI development, therefore, demands holistic programs that consider the human, process and cultural aspects of this work.

Creating diverse AI teams is critical to building inclusive systems. It’s not just about hiring diverse talent but creating an inclusive culture where diverse voices are valued and heard. Companies are also introducing more targeted hiring, mentorship and retention programs to create more inclusive technical staff.

Interdisciplinary communication is needed to uncover potential bias and ensure that AI systems meet the needs of different stakeholders. That extends to involving domain experts, people from various communities and affected populations in the design of AI itself. Many institutions are putting in place AI ethics boards, bias review committees and community advisory panels to bring a broad range of voices to the table on AI projects.

Training and education initiatives help ensure that all team members recognize the importance of AI fairness and possess the ability to detect and mitigate bias. This includes technical training ranging from bias-detection algorithms to broader education about the social and ethical implications of AI systems.

Governance mechanisms establish structure and accountability for inclusive AI development. This includes well-defined processes and policies for AI development, clear roles and responsibilities for mitigating bias, and a clear escalation and resolution process for fairness concerns.

Measuring and Monitoring AI Fairness

Since programs can’t be developed in a vacuum, successful AI diversity and inclusion initiatives need to be supported by strong measurement and monitoring systems. However, defining fairness in AI systems is difficult, as there are several reasonable definitions of fairness that can contradict each other.

Individual fairness aims to treat similar individuals in a similar way, while group fairness aims to ensure that people from different groups receive fair treatment. Statistical parity checks whether positive outcomes are assigned at equal rates across groups, and equalized odds requires that error rates (false positives and false negatives) do not differ by group.
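The two group-fairness notions above can be written down in a few lines. This sketch assumes binary predictions and exactly two groups being compared; the function names are hypothetical, and summarising equalized odds as the larger of the two rate gaps is one common convention rather than the only one.

```python
def rate(values):
    """Share of 1s in a list of 0/1 values (NaN for an empty list)."""
    return sum(values) / len(values) if values else float("nan")


def statistical_parity_difference(y_pred, groups, a, b):
    """Positive-prediction rate of group a minus that of group b."""
    rate_a = rate([p for p, g in zip(y_pred, groups) if g == a])
    rate_b = rate([p for p, g in zip(y_pred, groups) if g == b])
    return rate_a - rate_b


def equalized_odds_difference(y_true, y_pred, groups, a, b):
    """Largest gap between groups in true positive or false positive rate."""
    gaps = []
    for label in (1, 0):  # label 1 gives the TPR gap, label 0 the FPR gap
        rate_a = rate([p for t, p, g in zip(y_true, y_pred, groups)
                       if g == a and t == label])
        rate_b = rate([p for t, p, g in zip(y_true, y_pred, groups)
                       if g == b and t == label])
        gaps.append(abs(rate_a - rate_b))
    return max(gaps)


# Hypothetical predictions for two groups with identical true labels.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

spd = statistical_parity_difference(y_pred, groups, "a", "b")
eod = equalized_odds_difference(y_true, y_pred, groups, "a", "b")
print(spd, eod)  # 0.5 0.5
```

A value of zero on either metric means the groups are treated identically by that definition. The two can disagree when base rates differ between groups, which is one source of the conflicts between fairness definitions mentioned earlier.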

Institutions are building out fairness measures that consider several dimensions of fairness and bias. These encompass notions such as demographic parity, equality of opportunity, and calibration checks, which test whether the confidence scores of the AI system are equally well calibrated across all groups.
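A calibration check asks whether a score of, say, 0.9 corresponds to roughly a 90% observed positive rate, and whether that holds equally for every group. The sketch below uses a simple binned calibration error; the bin count, function names and data are illustrative assumptions.

```python
def calibration_gap(scores, y_true, n_bins=5):
    """Binned calibration error: size-weighted mean of |mean score - observed rate|."""
    bins = [[] for _ in range(n_bins)]
    for score, truth in zip(scores, y_true):
        index = min(int(score * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        bins[index].append((score, truth))

    total = len(scores)
    error = 0.0
    for bucket in bins:
        if not bucket:
            continue
        mean_score = sum(s for s, _ in bucket) / len(bucket)
        observed = sum(t for _, t in bucket) / len(bucket)
        error += len(bucket) / total * abs(mean_score - observed)
    return error


def calibration_by_group(scores, y_true, groups, n_bins=5):
    """Compute the calibration gap separately for each group."""
    result = {}
    for group in sorted(set(groups)):
        rows = [i for i, g in enumerate(groups) if g == group]
        result[group] = calibration_gap(
            [scores[i] for i in rows], [y_true[i] for i in rows], n_bins
        )
    return result


# Hypothetical scores: identical confidence for both groups, different outcomes.
scores = [0.9, 0.9, 0.1, 0.1, 0.9, 0.9, 0.1, 0.1]
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

result = calibration_by_group(scores, y_true, groups)
print(result)  # the scores are far less trustworthy for group "b"
```

With so few examples per bin the numbers are only illustrative; on real data each group needs enough samples per bin for the estimate to be meaningful.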

Ongoing monitoring systems track how AI is working for different groups over time and help detect when performance gaps emerge or existing biases grow. These systems often raise alerts when fairness metrics dip below acceptable thresholds and provide dashboards for ongoing review of system equity.
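A threshold alert of this kind can be sketched as a function that scans per-period selection rates and flags any period where the lowest group's rate falls too far below the highest. The 0.8 default echoes the four-fifths rule of thumb used in US employment contexts; it is an assumption here, not a threshold the article specifies, and the period labels and rates are invented.

```python
def fairness_alerts(history, threshold=0.8):
    """Return (period, ratio) for every period whose min/max rate ratio is too low.

    history: list of (period_label, {group: selection_rate}) tuples.
    threshold: hypothetical acceptable floor for lowest_rate / highest_rate.
    """
    alerts = []
    for period, rates in history:
        highest = max(rates.values())
        lowest = min(rates.values())
        ratio = lowest / highest if highest > 0 else 1.0
        if ratio < threshold:
            alerts.append((period, round(ratio, 3)))
    return alerts


# Hypothetical monthly selection rates for two groups.
history = [
    ("2024-01", {"a": 0.50, "b": 0.45}),
    ("2024-02", {"a": 0.50, "b": 0.35}),
]

alerts = fairness_alerts(history)
print(alerts)  # [('2024-02', 0.7)]
```

In a production setting the same check would typically run on a schedule and feed a dashboard or paging system, with the threshold chosen by the governance process rather than hard-coded.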

Audits provide a systematic assessment of AI systems for bias and discrimination. These can range from internal audits carried out by specialist teams to third-party audits by independent experts or community-led audits that involve local communities in the evaluation work.

Regulatory and Policy Landscape

Regulation of AI bias and discrimination is evolving quickly, and governments around the world are creating new regulatory structures and governance and accountability guidance for AI.

The EU AI Act contains provisions on bias and discrimination in AI systems, in particular for high-risk uses such as employment, education and law enforcement. The Act requires those responsible for high-risk systems to examine them for bias and adopt mitigation measures, and it imposes hefty penalties for non-compliance.

Several federal bodies in the USA are working on AI guidance and regulations. The Equal Employment Opportunity Commission has released guidance on AI in hiring, and the Federal Trade Commission has made it clear that existing anti-discrimination laws apply to AI systems.

Many states and localities across the United States are developing their own AI governance mechanisms. New York City’s mandate for bias audits of automated employment decision tools is among the first local AI bias regulations of its kind in the United States.

Internationally, global AI ethics and governance frameworks are being pursued by entities such as the OECD and UNESCO, which emphasize the significance of fairness, accountability, and human rights in AI deployment & development.

The Path Forward

Creating AI that is truly inclusive requires long-term, sustained investment, effort and prioritization across the tech ecosystem. That includes sustained investment in ways to identify and counter bias, greater attention to diversity and inclusion within the larger tech community, and ongoing conversations among tech workers, policy makers, and affected communities.

Education is crucial for raising awareness of AI bias and promoting best practices. This covers technical education for AI developers, policy education for regulators and educators, and digital literacy for the general public.

Cross-industry collaboration to share best practices, set common standards, and create joint resources can help address the problem of bias in AI. Groups like the Partnership on AI and the AI Ethics Initiative are helping to organize those efforts.

Research and development need to focus on advancing the state of the practice in fair AI systems. This includes developing new technical approaches to reduce bias, producing better methods of evaluating these approaches, and increasing our understanding of the complicated feedback loop between AI systems and social equity.

Conclusion

The pressing puzzle of how to construct fair and inclusive AI systems is one of our time’s defining problems. As AI becomes more potent and more pervasive, making sure it benefits us all equitably is not just a technical problem—it is a moral and a social one.

AI bias is not a mere technical error; it is a complex issue that cuts across human, organisational and systemic factors. Tackling it will require multidisciplinary teams, inclusive processes, strong governance, and unwavering dedication to equity.

The stakes are high, but so is the opportunity. By prioritizing diversity and inclusion in the development of AI, we can create systems that do more than avoid perpetuating inequalities; they can actively work towards a fairer society. It is a long-term endeavour, one that requires continued effort and vigilance, yet the benefits it offers to individuals, organisations, and the wider community can be life-changing.

What we decide today will determine what role AI plays for generations. By embracing inclusive approaches, nurturing diverse talent, establishing robust governance, and proactively rooting out bias, we can ensure that AI becomes a positive force for equity and justice, resulting in a legacy of technology that lifts up all of us.

