In boardrooms and break rooms alike, generative AI has created a profound organizational paradox: immense potential set against existential anxiety.

Radiologists watch AI diagnose tumours in seconds, financial analysts see algorithms predict market shifts with remarkable precision, and manufacturing teams see systems flag defects that human eyes can’t even perceive.

But beneath this promise runs an undercurrent of resistance. This is not simply technological incomprehension; it is a deeply human response to sharing cognitive space with an intelligence we can’t quite understand.

The gap between companies eager to capitalize on the promise of AI and those paralyzed by fear of implementation widens every day, with McKinsey forecasting a 60% productivity difference between leaders and laggards. The strategic imperative isn’t just deploying generative AI tools; it’s managing the psychological transition from reluctance to competence.

The Fear Factor: Finding Resistance in the Organization

At first glance, reluctance about generative AI might seem like a purely technical challenge: systems that don’t perform, or IT that lacks the necessary infrastructure. In reality, its roots are profoundly human, bound up in three interlocking anxieties:

1. Perceived Skill Gaps

Many employees worry that they don’t even know how to start using AI tools, let alone build them into their daily work. Picture a veteran project manager who has never opened a Python notebook: handing any part of their workflow to “some black-box algorithm” can feel like admitting they are behind the curve.

2. Fears of Job Insecurity

Beyond matters of competence, there’s a more gut-level question: “Is this going to replace me?” Even when a tool promises to help you write reports faster or spare you data-entry forms forever, it raises an uncomfortable question: what is left for me? Professionals in all sorts of fields, from accounting to customer service, stop in their tracks when they picture AI quietly absorbing roles they had assumed only they could fill.

3. Ethical Unease

Finally, executives and staff alike worry that AI systems will be biased, breach privacy or make opaque decisions. This is not “tech fear” but a legitimate governance challenge: how can you ensure that a recommendation from an AI system is fair, defensible and consistent with your organization’s values?

How AI Literacy Contributes to Competitive Advantage

As the competitive playing field moves ever faster, AI expertise has shifted from an optional skill to the anchor of strategic competitiveness, and it is steadily widening the gap between leaders and laggards.

The companies that get generative AI right don’t just automate mundane chores; they accelerate decision-making, compress innovation cycles and open the door to entirely new business models.

Think of pharmaceutical researchers using AI-powered molecular design to discover drug candidates in weeks rather than years, or manufacturers using predictive maintenance to turn costly downtime into a durable uptime-as-a-service business.

These tools don’t displace human talent; they amplify it. Financial analysts skilled in prompt engineering can focus their highest-order thinking on market strategy, and customer experience teams can reimagine themselves as architects of personalized journeys powered by smart recommendation engines.

Most importantly, AI fluency is self-reinforcing: every successful application generates proprietary data that can fine-tune future models, compounding the benefits over time.

Conversely, organizations that stay on the sidelines will not just miss out on efficiency gains; they risk becoming irrelevant as competitors redefine industry economics around AI-generated insights.

From healthcare systems that use generative diagnostics to prevent disease to entertainment platforms that shape viewing habits, AI fluency is no longer optional; it is the core competency that will distinguish tomorrow’s market leaders from yesterday’s also-rans.

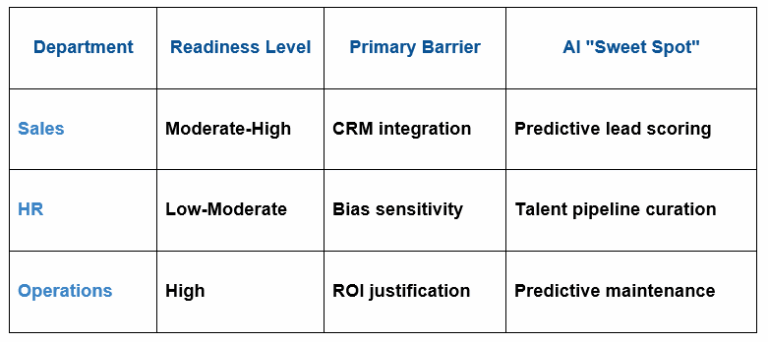

The Readiness Spectrum Map – Customizing Approaches by Role and Department

An organization’s readiness for AI is not binary; it is a continuum, with roles and departments sitting at very different points depending on the complexity of their workflows, their access to data and how they create value.

This fragmentation demands surgical precision: what energizes marketing strikes fear into legal, and what speeds up finance transactions can bog down HR. The root cause of most implementation failures? Blindly imposing uniform “AI adoption” frameworks on fundamentally different operational DNA.

Think of three archetypical stages along the readiness spectrum:

1. High-Velocity Functions (Marketing, Innovation)

- Readiness Drivers: Creative iteration needs, rapid prototyping culture, unstructured data abundance

- Tailored Strategy: Embed low-friction generative tools (such as ChatGPT Enterprise) directly in the workflow for ideation and content writing, but build “creative guardrails” into the process to prevent uniformity.

- Example: Coca-Cola’s marketing team relies on AI for around 60–70% of first-round campaign ideas, while humans refine them further, applying brand narrative and emotional resonance.

Adoption Catalyst: Quantify both time saved and volume of output.

2. Governance-Intensive Functions (Legal, Compliance)

- Readiness Barriers: Stringent accuracy requirements, risk exposure and regulatory obligations

- Tailored Strategy: Use “AI co-pilot” models with chain-of-custody auditing (e.g., Harvey AI at Allen & Overy), in which algorithms handle precedent research and humans retain judgment over case strategy. Begin with low-risk use cases such as contract clause extraction before progressing to deposition preparation.

Adoption Catalyst: Position AI as risk management. At DLA Piper, automated docket tracking reduced missed deadlines by 45%.

3. Hybrid Value-Creators (R&D, Product Development)

- Readiness Paradox: High technical skill, but deeply ingrained, iterative ways of working

- Tailored Strategy: Integrate domain-specific micro-models at key points in the development lifecycle, such as generative design during conceptualization (Autodesk Fusion) and simulation testing during validation (Ansys), while keeping human control points at the handoffs between stages.

- Example: Siemens Healthineers shortened prototype cycles by deploying AI for component optimization while retaining systems engineers as solution architects.

The Departmental Diagnostic Tool:

The tipping point comes when companies stop asking “Are we AI-ready?” and start asking “Where are we AI-ready?” By mapping the readiness spectrum through role-specific value lenses (marketing needs speed, legal needs precision, engineering needs iterative leverage), leaders can avoid wasting resources in resistant areas while accelerating progress in high-ROI domains. This is not mere optimization; it is the art of aligning technological capability with the realities of how people actually work.

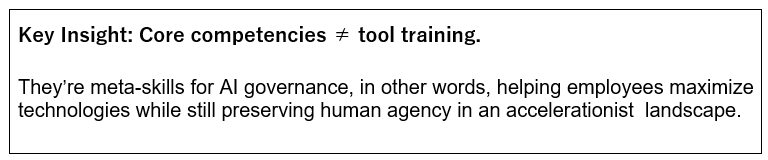

Developing Core Competencies – Essential Skills for Every Employee

The democratization of generative AI will require a foundational skill set that spans departments: a universal “augmentation literacy” that turns each employee from a passive tool user into an active co-creator. This literacy rests on three non-negotiable pillars: prompt engineering agility, critical augmentation judgment and ethical co-creation fluency.

Prompt engineering agility is more than simple command entry; it is the art of shaping an iterative dialogue. It lets marketers steer AI from generic drafts to on-brand narratives with precision, or lets HR draw nuanced talent insights from workforce data through layered questioning.

At Siemens, assembly-line technicians now use structured prompt sequences to diagnose equipment errors 25% faster, turning vague symptom descriptions into actionable repair protocols.

Critical augmentation judgment is the new organizational immune system: it trains employees to scrutinize AI outputs for bias, missing context or simple flaws in logic.

At JPMorgan Chase, financial analysts do this through “algorithmic stress-testing,” in which AI market predictions are scrutinized against historical anomalies before any action is taken. Similarly, marketing teams at Unilever use bias-detection frameworks to audit AI-generated campaign concepts for cultural blind spots.

Ethical co-creation fluency turns compliance from a box-ticking exercise into collective ownership. When pharmaceutical researchers at Roche use generative AI to design molecules, they navigate the gray areas of patent law through “innovation ethics workshops,” learning to distinguish derivative work from genuine invention.

This trio forms a dynamic foundation: not fixed skills, but muscle memory that evolves as the technology does. As AI grows more powerful, workers grounded in these principles don’t just keep pace; they increasingly design their own augmentation, turning disruption into sustainable competitive advantage.

Creating Psychological Safety – Normalizing AI Experimentation

In today’s fast-paced technology environment, establishing psychological safety around AI experimentation is no longer a nice-to-have; it is essential for unlocking AI’s most powerful forms of innovation.

Building a culture in which every employee is empowered to experiment and iterate without fear of reprisal encourages risk-taking and rapid learning. When teams understand that setbacks are treated as critical data points rather than personal failures, they are more willing to take risks and innovate with new AI applications, from improving predictive models to rethinking what customer engagement can be. In turn, this culture transforms AI innovation from a top-down directive into a thriving grassroots creative engine, where insights can emerge from any part of the organization.

For example, when initial AI trials are framed as an ongoing series of experiments rather than one-off pass/fail tests, they can help instil a culture in which continuous improvement becomes the default and every experiment, successful or not, adds to a bank of strategic knowledge.

Leaders can also reinforce the approach by encouraging transparency, creating safe spaces for reflective post-mortems, and aligning incentives to reward iteration rather than victory. By doing so, organizations not only increase their collective ability to put AI to work for competitive advantage but also weave resilience into the fabric of the enterprise, so that everybody, not just a few, can navigate the uncertainties of the digital frontier with confidence and agility.

The Vital Role of Leadership – Leading the Culture Change

In the age of AI proliferation, a different kind of leadership is needed to drive the cultural shift required to embrace generative AI and win with it. Pioneering leaders know that their job goes beyond conventional management: it is to build a culture in which safe testing is the norm, shared learning is celebrated and failures are reframed as enablers of innovation. By openly sharing their learning curves and even their vulnerabilities, such as admitting early AI implementation mistakes or joining “failure labs” that dissect what went wrong, leaders remove the stigma attached to risk-taking. They build trust by establishing transparent feedback loops and dedicated innovation forums where everyone can ideate, test and iterate with AI tools. This visible commitment to adaptive learning bridges an organization’s strategic intent and its day-to-day behaviour, turning resistance into adaptive strength.

For instance, when leadership demonstrates a spirit of continuous improvement and offers mentorship programs around AI expertise, employees become more likely to experiment and absorb new best practices, driving their organizations closer to full AI fluency.

In the end, leadership that champions cultural change is the lever on which a competitive, generative AI-enabled enterprise turns, converting potential disruption into shared strategic advantage.

Scaling Fluency: Moving from Silos to Integration Across the Organization

In today’s fast-evolving digital landscape, scaling AI fluency requires moving beyond departmental silos to build a unified, organization-wide innovation ecosystem. This shift depends on dissolving the walls that tend to isolate expertise, knowledge and experimentation.

Leaders need to orchestrate vigorous cross-functional cooperation, setting up common platforms, communities of practice and centralized knowledge hubs that allow every team to adopt AI strategies coherently.

For example, companies such as Google and Microsoft have overcome these disconnects by taking AI from pilots into every part of the enterprise, using internal AI ambassador programs and integrated training programs that spread leading-edge practices across the company. They align incentives, share best practices and use agile feedback loops to build AI fluency as an integrated organizational capability, moving beyond fragmented experiments that stay in the practice gym and never reach the factory floor.

This all-encompassing integration not only multiplies the success of each department but also fosters collective intelligence, in which AI-based insights and innovations are continually reused and amplified, turning isolated wins into a consistent strategic advantage.

Workforce Empowerment

In this era, generative AI is more than a mere efficiency tool: it is a transformative force that reshapes career arcs and powers professional reinvention. By freeing employees from monotonous tasks and giving them deeper insights for better decision-making, AI enables them to move beyond acting as operators and become strategic innovators.

This shift offers a twofold benefit: companies stand to unlock major productivity gains, while individuals gain an opportunity for lifelong development and skill diversification. Progressive companies are pursuing holistic upskilling strategies (immersive micro-learning platforms, AI-fueled mentorship programs and cross-functional innovation labs) so that every employee can make effective use of these intelligent tools.

For instance, organizations that adopt approaches like these have seen workers move into hybrid roles that merge technical knowledge with creative problem-solving, creating workplaces where lifelong learning is not a supplement but the hallmark of career success.

By making AI fluency attainable for a broad range of employees and weaving it into the fabric of professional development, organizations are not just hedging against the future; they are ushering in a workforce renaissance in which human and machine combine forces to accelerate individual excellence and collective invention.

Success Metrics – Beyond Efficiency Use Cases to Strategic Impact

The real impact of AI implementation is not just better performance in the traditional sense but transformation across a range of organizational capabilities and strategic priorities. Metrics therefore need to shift from measuring process efficiency to measuring the uptake of augmented decision-making, cross-functional collaboration and new revenue insights.

For instance, leading-edge businesses are now using “augmentation indices” to track progress in leadership agility and innovation velocity, measuring how AI-driven insights have transformed business models and accelerated market responses.

At the same time, companies are tracking the growth of hybrid roles that combine human creativity with AI algorithms, with dramatic increases in high-value contributions. When AI investments are framed in terms of strategic factors such as market presence, adaptability or organizational agility, the emphasis shifts from spreadsheet-style process optimization to fundamentally transformative competitive advantage.

This holistic perspective not only justifies technology adoption through attributable performance gains but also shows that AI is the backbone of durable strategic impact and sustainable growth.

Ethics by Design – Developing Trustworthy AI Systems

In a world where generative AI is embedded throughout business and society, trust is the ultimate value proposition, and it comes at a price. It can be earned only through “Ethics by Design”: deliberately building fairness, transparency, accountability and privacy into how systems are developed.

Rather than treating ethics as an ad hoc compliance afterthought, leading organizations integrate ethical guardrails into their development pipelines. Google’s PAIR (People + AI Research) team co-designs prototypes with end users to surface unintended harms before deployment; IBM’s AI Ethics Board mandates bias-mitigation toolkits (e.g., AI Fairness 360), resulting in a verified 18% reduction in discriminatory outcomes in credit-scoring models; and Microsoft’s Responsible AI Standard formalizes privacy-by-design practices, such as differential privacy techniques that mask individual data while preserving aggregate statistics.
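To make the differential privacy idea concrete, here is a minimal sketch of the classic Laplace mechanism applied to a mean. It is an illustration under stated assumptions, not Microsoft’s implementation: the function names, the clipping bounds, the epsilon value and the sample data are all hypothetical, and the number of records is assumed to be public. Clipping limits how far any one person can move the result, and noise scaled to that limit divided by epsilon hides each individual while keeping the aggregate usable.

```python
import random
from typing import Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with
    rate 1/scale is Laplace-distributed with location 0 and that scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_mean(
    values: Sequence[float],
    lower: float,
    upper: float,
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return an epsilon-differentially private estimate of the mean.

    Assumes the number of records is public. Each value is clipped to
    [lower, upper], so replacing one record changes the mean by at most
    (upper - lower) / n; that sensitivity sets the noise scale.
    """
    if not values:
        raise ValueError("values must be non-empty")
    if epsilon <= 0 or upper <= lower:
        raise ValueError("need epsilon > 0 and upper > lower")
    if rng is None:
        rng = random.Random()
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    true_mean = sum(clipped) / n
    sensitivity = (upper - lower) / n
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical salary data (in thousands), clipped to a 0-200 range.
    salaries = [52, 61, 58, 75, 49, 90, 66, 70, 55, 63]
    estimate = private_mean(salaries, lower=0, upper=200, epsilon=1.0,
                            rng=random.Random(7))
    print(f"private mean estimate: {estimate:.1f}")
```

Smaller epsilon values give stronger privacy at the cost of noisier aggregates. Because the sensitivity falls as 1/n, the noise becomes negligible for large datasets, which is why aggregate statistics survive while individual records stay masked.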

This proactive stance rests on three pillars:

1) Principled Frameworks – Adopting IEEE P7000-series standards or the risk-assessment approach of the EU AI Act to rank systems by the harm they may cause.

2) Continuous Auditing – Real-time monitoring dashboards for fairness KPIs (e.g., demographic parity, equalized odds), with automated alerts when drift exceeds configurable thresholds.

3) Stakeholder Co-Governance – Multidisciplinary ethics councils and public review boards that weigh trade-offs between utility and risk, such as Salesforce’s “Ethics in AI” advisory panels.
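The continuous-auditing pillar can be sketched as a small batch check that computes two of the fairness KPIs named above and raises alerts when either exceeds a configurable threshold. This is a minimal illustration, not a production monitor: it assumes binary labels and predictions and exactly two groups, and the function names, the 0.1 default threshold and the sample data are hypothetical. It uses common definitions: demographic parity compares how often each group receives a positive prediction, and equalized odds takes the larger of the true-positive-rate and false-positive-rate gaps.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class FairnessReport:
    demographic_parity_diff: float
    equalized_odds_diff: float
    alerts: List[str] = field(default_factory=list)


def _rate(numerator: float, denominator: int) -> float:
    # Guard against empty subgroups instead of dividing by zero.
    return numerator / denominator if denominator else 0.0


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[str], group: str) -> Dict[str, float]:
    """Selection rate, true-positive rate and false-positive rate for one group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    positives = [i for i in idx if y_true[i] == 1]
    negatives = [i for i in idx if y_true[i] == 0]
    return {
        "selection": _rate(sum(y_pred[i] for i in idx), len(idx)),
        "tpr": _rate(sum(y_pred[i] for i in positives), len(positives)),
        "fpr": _rate(sum(y_pred[i] for i in negatives), len(negatives)),
    }


def audit(y_true: Sequence[int], y_pred: Sequence[int],
          groups: Sequence[str], threshold: float = 0.1) -> FairnessReport:
    """Compare two groups and flag any fairness gap above `threshold`."""
    labels = sorted(set(groups))
    if len(labels) != 2:
        raise ValueError("this sketch supports exactly two groups")
    a = group_rates(y_true, y_pred, groups, labels[0])
    b = group_rates(y_true, y_pred, groups, labels[1])
    # Demographic parity: gap in positive-prediction rates between groups.
    dp = abs(a["selection"] - b["selection"])
    # Equalized odds: the larger of the TPR gap and the FPR gap.
    eo = max(abs(a["tpr"] - b["tpr"]), abs(a["fpr"] - b["fpr"]))
    report = FairnessReport(dp, eo)
    if dp > threshold:
        report.alerts.append(f"demographic parity gap {dp:.2f} exceeds {threshold}")
    if eo > threshold:
        report.alerts.append(f"equalized odds gap {eo:.2f} exceeds {threshold}")
    return report


if __name__ == "__main__":
    # Hypothetical batch of binary predictions for two groups, A and B.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    report = audit(y_true, y_pred, groups, threshold=0.1)
    for alert in report.alerts:
        print("ALERT:", alert)
```

With this sample batch both gaps come out at 0.5, so both alerts fire. In a live dashboard the same check would run on each scoring window, so drift appears as a gap that grows over time; dedicated toolkits such as AI Fairness 360 provide these and many other metrics.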

The strategic return is significant: companies that prioritize ethics by design not only stay ahead of regulation, fines and public backlash but also earn broader acceptance and adoption across the communities they serve.

Ultimately, as businesses build ethical thinking into the very fabric of their system architecture, they can transform AI from an alarming existential threat into a sustainable competitive opportunity: ethical intelligence that drives faster innovation, preserves human dignity and builds long-term stakeholder loyalty.

Conclusion

AI fluency is not a one-time effort; it’s a continuous journey powered by courageous leadership, effective collaboration, strategic evaluation and deliberate ethics.

To enact that evolution, executives will need to:

- Scale pilots into company-wide capabilities.

- Encourage teams to go beyond their job descriptions.

- Trade narrow efficiency measures for strategic vision, and fund continuous upskilling.

- Use balanced scorecards to track the quality of decision-making and opportunity gains.

- Establish ethical principles to build trust.

Once AI becomes a permanent cultural force that fosters adaptability, responsible risk-taking and human dignity, your organization won’t just weather disruption; it will lead the way into the future.

Found this article interesting?

1. Follow Dr Andrée Bates’s LinkedIn Profile Now

Revolutionize your team’s AI vendor selection process, unlock unparalleled efficiency and save millions on poor AI vendor choices that don’t meet your needs! Stop wasting precious time sifting through countless vendors and gain instant access to a curated list of top-tier companies, expertly vetted by leading pharma AI experts.

Every year, we rigorously interview thousands of AI companies that tackle pharma challenges head-on. Our comprehensive evaluations cover whether each solution delivers what is needed, client results, AI sophistication, cost-benefit ratio, demos and more. We provide an exclusive, dynamic database, updated weekly, brimming with the best AI vendors for every business unit and challenge. Plus, our cutting-edge AI technology makes it a breeze to search by business unit, challenge, vendor, or demo videos and information.

- Discover vendors delivering out-of-the-box AI solutions tailored to your needs.

- Identify the best of the best effortlessly.

- Anticipate results with confidence.

Transform your AI strategy with our expertly curated vendors that walk the talk, and stay ahead in the fast-paced world of pharma AI!

Get on the wait list to access this today. Click here.

4. Take our FREE AI for Pharma Assessment

This assessment will score your current use of AI against industry best-practice benchmarks, and you’ll receive a report outlining the four key areas you can improve to successfully transform your organization or business unit.

Plus, receive a free link to our webinar ‘AI in Pharma: Don’t be Left Behind’. Link to assessment here.

5. Learn more about AI in Pharma in your own time

We have created in-depth, on-demand training about AI specifically for pharma that makes AI easy to understand and shows how to apply it across all the different pharma business units. Click here to find out more.